Introduction

In this blog, I'm going to walk through one error that I used to encounter very often while scheduling DAGs, along with its solution. Along the way, this blog will also give you a little insight into DAGs and Airflow.

Note:- I installed Airflow from the Docker images and I'm using Apache Airflow 2.5.0. The solution should work for Apache Airflow 2.1.1 and later; I have not checked it on versions older than 2.1.1.

Apache Airflow

Apache Airflow is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows. Airflow's extensible Python framework enables you to build workflows connecting with virtually any technology. A web interface helps manage the state of your workflows.

DAGs

In Airflow, a DAG (Directed Acyclic Graph) is a collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies. A DAG is defined in a Python script, which represents the DAG's structure (tasks and their dependencies) as code.
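To make the "tasks plus dependencies" idea concrete, here is a minimal sketch that uses only Python's standard library `graphlib` (Python 3.9+). It is not Airflow's API, and the task names are hypothetical; it only illustrates how a dependency graph determines a valid run order.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "training": {"extract"},
    "report": {"training"},
}


def run_order(deps):
    """Return one valid execution order for the given dependency mapping.

    graphlib raises CycleError if the dependencies contain a cycle,
    which is exactly what "acyclic" rules out.
    """
    return list(TopologicalSorter(deps).static_order())


print(run_order(dependencies))
```

Airflow does the same kind of ordering for you: a task only runs once the tasks it depends on have finished.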

Broken DAGs

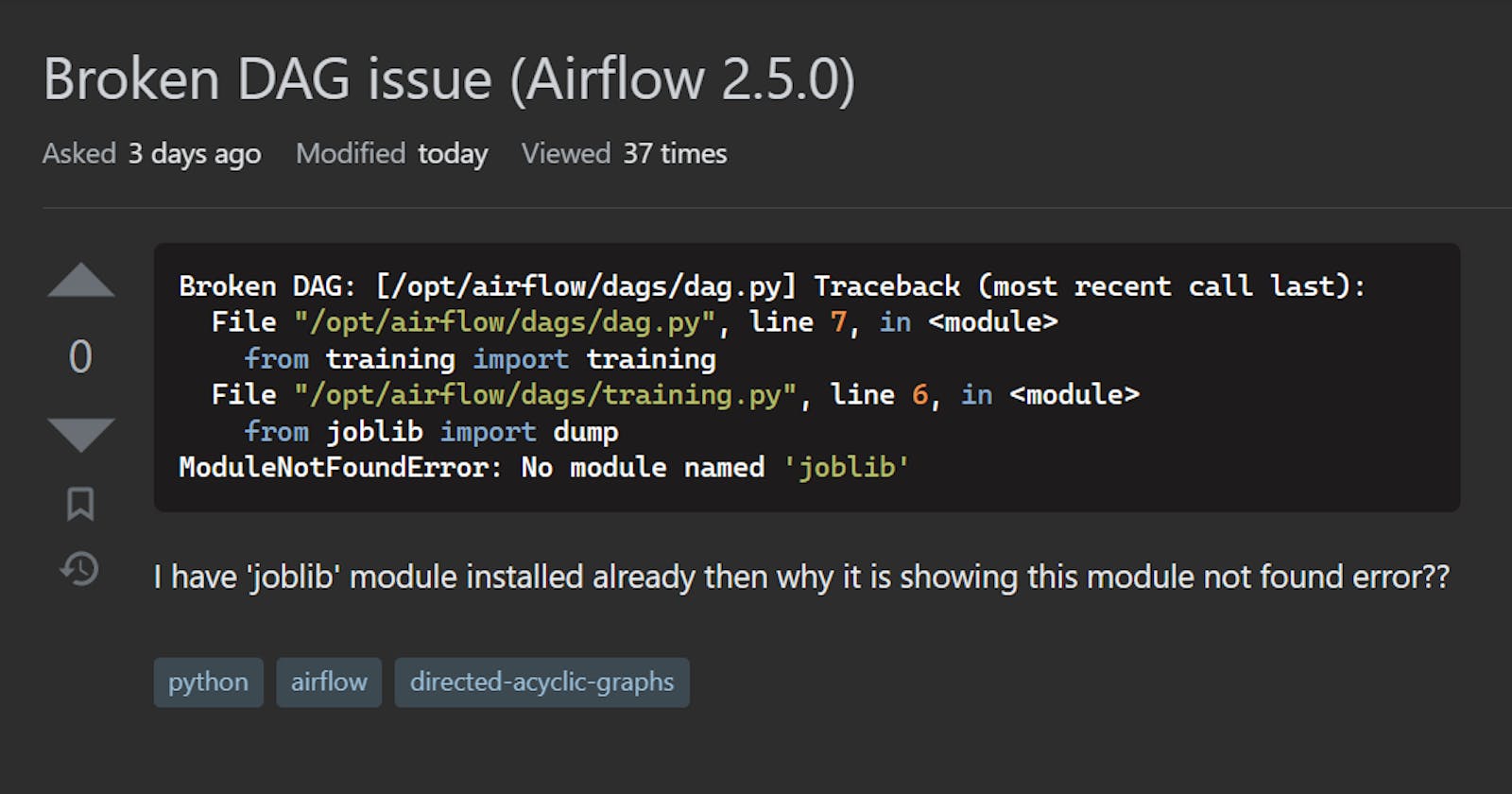

Broken DAG: [/opt/airflow/dags/dag.py] Traceback (most recent call last):

File "/opt/airflow/dags/dag.py", line 7, in <module>

from training import training

File "/opt/airflow/dags/training.py", line 6, in <module>

from joblib import dump

ModuleNotFoundError: No module named 'joblib'

Here, I had installed the 'joblib' library in my virtualenv and also listed it in requirements.txt, but I was still getting this error. Why?
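A quick way to investigate is to ask the interpreter that actually runs your DAGs whether it can see the module. The sketch below uses only the standard library; the function name `missing_modules` is my own, and it expects top-level module names such as `joblib`.

```python
import importlib.util
import sys


def missing_modules(names):
    """Return the top-level module names the current interpreter cannot locate."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Shows which Python is running; compare this between your host and the container.
    print("interpreter:", sys.executable)
    print("missing:", missing_modules(["joblib"]))
```

Run it once with your virtualenv's Python and once with the Python inside an Airflow container (for example through `docker-compose exec` on one of the Airflow services; the exact service name depends on your compose file). If 'joblib' is reported missing only in the container, the library is installed in a different environment from the one Airflow uses.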

Temporary Answer :-

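Because Airflow runs inside Docker containers, and those containers have their own Python environment, a library installed in a virtualenv on your machine is not visible to Airflow. To make it available, specify the library in the docker-compose.yml file, inside the 'environment' section, under '_PIP_ADDITIONAL_REQUIREMENTS':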

_PIP_ADDITIONAL_REQUIREMENTS: ${_PIP_ADDITIONAL_REQUIREMENTS:- joblib==1.2.0}
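If you need more than one library, the variable takes them as a single space-separated string. Below is a small sketch that turns the contents of a requirements.txt into that string. The function name and the sample contents are hypothetical, and it assumes simple pinned lines like `joblib==1.2.0` (no spaces inside a requirement, no URLs containing `#`).

```python
def requirements_to_env(text):
    """Join requirement lines into one space-separated string."""
    specs = []
    for line in text.splitlines():
        # Drop trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        # Skip blank lines and pip options such as "-r other.txt".
        if line and not line.startswith("-"):
            specs.append(line)
    return " ".join(specs)


# Hypothetical requirements.txt contents, for illustration only.
sample = """
# libraries needed by the DAG
joblib==1.2.0
scikit-learn==1.2.0  # pinned for illustration
"""

print(requirements_to_env(sample))
```

The printed string can be pasted after `:-` in the `_PIP_ADDITIONAL_REQUIREMENTS` line.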

Download the docker-compose.yml file from here:- docker-compose.yml

For more information check this link:- env-variables-docker-compose

After adding the library to the docker-compose.yml file, restart the services:

docker-compose restart

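A restart on its own does not pick up changes made to docker-compose.yml, so if the library is still missing after the above command, use the command below, which recreates the containers with the updated configuration: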

docker-compose up

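This will install all the required Python libraries when the Airflow services start. Because the packages are installed again every time the containers start, this approach suits quick testing rather than permanent use.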

Best Answer :-

The below link will take you to the discussion section...

If you would rather not go through the above link, follow the steps below. In docker-compose.yaml, find these lines:

# In order to add custom dependencies or upgrade provider packages you can use your extended image.

# Comment the image line, place your Dockerfile in the directory where you placed the docker-compose.yaml

# and uncomment the "build" line below. Then run `docker-compose build` to build the images.

# image: ${AIRFLOW_IMAGE_NAME:-apache/airflow:|version|}

build: .
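The steps above assume a Dockerfile sitting next to docker-compose.yaml but do not show one. As a minimal sketch, the helper below prints a three-line Dockerfile that extends the Airflow image and installs everything from requirements.txt. The function name is my own, the `2.5.0` tag is simply the version I mentioned in the note at the top, and the file is assumed to be called requirements.txt in the same directory; adjust all of these to your setup.

```python
def render_dockerfile(base_image="apache/airflow:2.5.0", requirements="requirements.txt"):
    """Build the text of a Dockerfile that adds extra packages to the Airflow image."""
    return (
        f"FROM {base_image}\n"
        f"COPY {requirements} /requirements.txt\n"
        "RUN pip install --no-cache-dir -r /requirements.txt\n"
    )


if __name__ == "__main__":
    # Save this output as a file named "Dockerfile" next to docker-compose.yaml.
    print(render_dockerfile(), end="")
```

With this approach the libraries are installed once, when the image is built, instead of every time the containers start.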

Now, run docker compose up --build as a shortcut if you do not want to run docker compose build separately.

docker compose up --build